At a time when computational resources seem abundant, there is much excitement around scaling up machine learning and training increasingly large models on bigger datasets. Intelligent life, however, has arisen not from an abundance of resources, but rather from a lack of them. Evolution naturally selects systems that are able to do more with less. We can see many examples of such resource “bottlenecks” that helped shape our development as a species: from the way the brain is wired, and how our consciousness is able to process abstract thought, to how we are able to convey abstract concepts to one another through drawings and gestures that developed into languages, stories, and culture. It is debatable whether such bottlenecks are a requirement for intelligence to emerge, but it is undeniable that our own intelligence is a result of resource constraints.

I am interested in studying how intelligence might have emerged from resource constraints. Below is a selection of projects I have worked on over the years that relate to this interest. Some of the works illustrate machine learning concepts interactively inside a web browser. Please read my blog for more information.

Selected Works

Collective Intelligence for Deep Learning: A Survey of Recent Developments

David Ha, Yujin Tang

Published in Collective Intelligence (2022)

Super excited to see ideas from complex systems such as swarm intelligence, self-organization, and emergent behavior gain traction again in AI research. So we wrote this survey of recent developments that combine ideas from deep learning and complex systems, and published it in the first issue of Collective Intelligence, an open-access journal co-published by SAGE and the Association for Computing Machinery (ACM).

EvoJAX: Hardware-Accelerated Neuroevolution

Yujin Tang, Yingtao Tian, David Ha

EvoJAX is a scalable, general-purpose, hardware-accelerated neuroevolution toolkit. This library enables many different neuroevolution algorithms to work with neural networks running in parallel across multiple TPUs/GPUs, achieving high performance by implementing the evolution algorithms, neural networks, and the tasks all in JAX. EvoJAX can run a wide range of evolution experiments within minutes on a TPU/GPU, compared to hours or days on CPU clusters.

Yujin Tang, David Ha

Presented at NeurIPS 2021 (Spotlight)

Reinforcement Learning agents typically perform poorly if provided with inputs that were not clearly defined in training. We present a new approach that enables RL agents to perform well, even when subject to corrupt, incomplete or shuffled inputs.

Modern Evolution Strategies for Creativity: Fitting Concrete Images and Abstract Concepts

Yingtao Tian, David Ha

Presented at NeurIPS 2021 Workshop on ML for Creativity and Design

We find that modern evolution strategies (ES) algorithms, when tasked with the placement of shapes, offer large improvements in both quality and efficiency compared to traditional genetic algorithms, and are even comparable to gradient-based methods. We demonstrate that ES is also well suited to optimizing the placement of shapes to fit the CLIP model, and can produce diverse, distinct geometric abstractions that are aligned with human interpretation of language.

Slime Volleyball Gym Environment

A simple gym environment for benchmarking single and multi-agent reinforcement learning algorithms.

Neuroevolution of Self-Interpretable Agents

Yujin Tang, Duong Nguyen, David Ha

Presented at GECCO 2020 (Best Paper Award)

Agents with a self-attention bottleneck not only solve tasks from pixel inputs with only a few thousand parameters, they are also better at generalization.

Learning to Predict Without Looking Ahead: World Models Without Forward Prediction

C. Daniel Freeman, Luke Metz, David Ha

Rather than assume forward models are needed, in this work, we investigate to what extent world models trained with policy gradients behave like forward predictive models, by restricting the agent’s ability to observe its environment.

Collabdraw: An environment for collaborative sketching with an artificial agent

Judith E. Fan, Monica Dinculescu, David Ha

Presented at ACM Conference on Creativity & Cognition in 2019

A web-based environment for collaborative sketching of everyday visual concepts. We integrate an artificial agent (using Sketch-RNN) that is both cooperative and responsive to actions performed by its human collaborator. We find that collaboration between humans and machines encourages the creation of novel and meaningful content.

Weight Agnostic Neural Networks

Adam Gaier, David Ha

Presented at NeurIPS 2019 (Spotlight)

Inspired by precocial species in biology, we set out to search for neural net architectures that can already (sort of) perform various tasks even when they use random weight values.

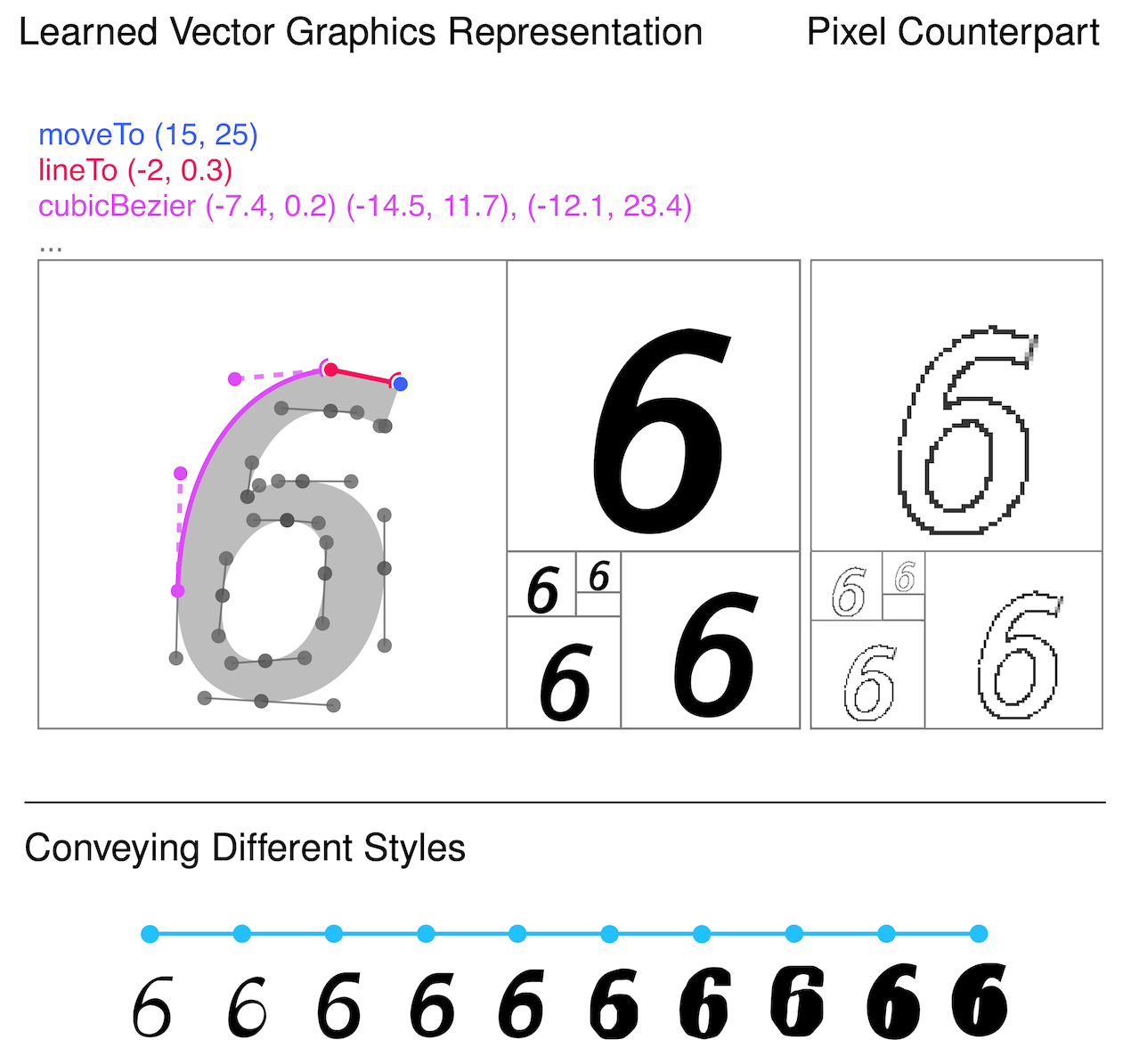

A Learned Representation for Scalable Vector Graphics

Raphael Gontijo Lopes, David Ha, Douglas Eck, Jonathon Shlens

SVG-VAE is a latent space model, just like MusicVAE and sketch-rnn. It learns a latent representation of the visual style of icons by training on their pixel rendering (the VAE). This lets us create palettes for blending and exploring icon styles in latent space.

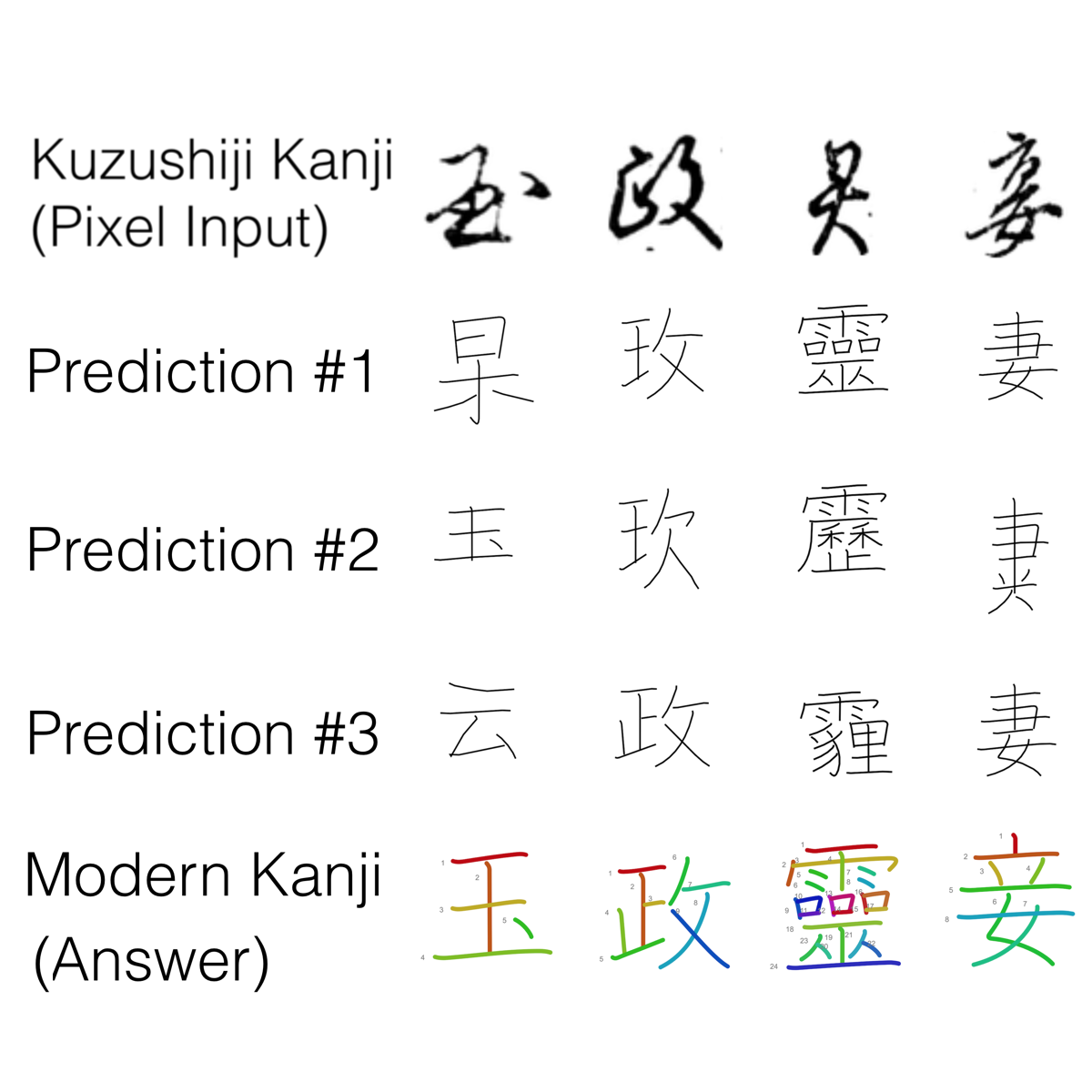

Deep Learning for Classical Japanese Literature

Tarin Clanuwat, Mikel Bober-Irizar, Asanobu Kitamoto, Alex Lamb, Kazuaki Yamamoto, David Ha

Presented at NeurIPS 2018 Workshop on ML for Creativity and Design

We introduce Kuzushiji-MNIST, a drop-in replacement for MNIST, plus two other datasets (Kana and Kanji). In this work, we also try more interesting tasks like domain transfer from Kuzushiji Kanji to modern Kanji.

Learning Latent Dynamics for Planning from Pixels

Danijar Hafner, Timothy Lillicrap, Ian Fischer, Ruben Villegas, David Ha, Honglak Lee, James Davidson

PlaNet learns a world model from image inputs only and successfully leverages it for planning in latent space.

Reinforcement Learning for Improving Agent Design

Published in Artificial Life, Fall 2019

What happens when we let an agent learn a better body design together with learning its task?

Pommerman: A Multi-Agent Playground

Cinjon Resnick, Wes Eldridge, David Ha, Denny Britz, Jakob Foerster, Julian Togelius, Kyunghyun Cho, Joan Bruna

NeurIPS Competitions held in 2018 and 2019

Presented at AAAI 2019 Workshop on RL in Games

We created Pommerman, a multi-agent environment based on the classic Bomberman game, with the aim of advancing the state of multi-agent RL research. It consists of a set of scenarios, each having at least four players and containing both cooperative and competitive aspects.

David Ha, Jürgen Schmidhuber

Presented at NeurIPS 2018 (Oral Presentation)

A generative recurrent neural network is quickly trained in an unsupervised manner to model pixel-based RL environments through compressed spatio-temporal representations. The world model's extracted features are fed into compact and simple policies trained by evolution. We also train our agent entirely inside of an environment generated by its own internal world model, and transfer this policy back into the actual environment.

In this article, I look at applying evolution strategies to reinforcement learning problems, and also highlight methods we can use to find robust policies.

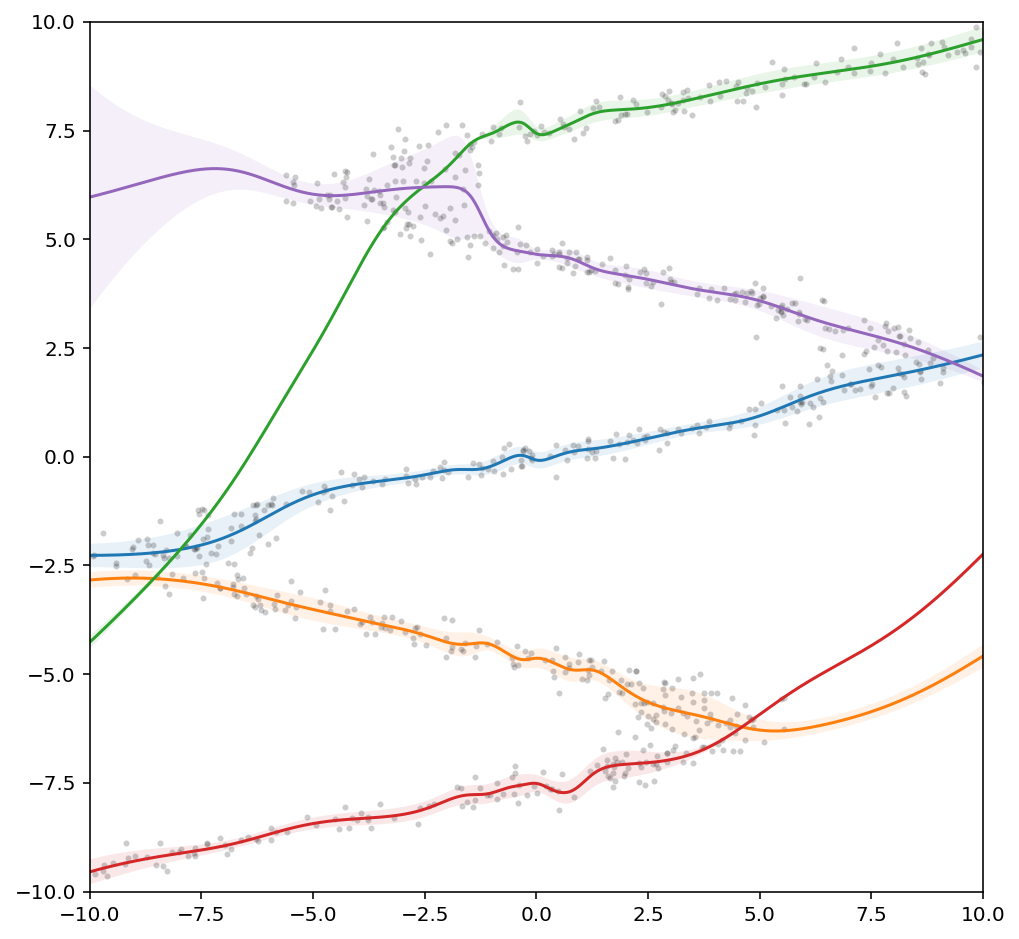

A Visual Guide to Evolution Strategies

I explain how evolution strategies work using a few visual examples. I try to keep the equations light, but provide links to original articles. This is the first post in a series of articles, where I plan to show how to apply these algorithms to a range of tasks from MNIST, Gym, Roboschool to PyBullet environments.
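As a rough illustration of the basic idea (a minimal sketch, not the code from the article), the snippet below implements one very simple evolution strategy: sample candidates from a Gaussian around a mean, keep the best few, and move the mean to their average. The function names, the toy `sphere_loss` objective, and every hyperparameter are assumptions chosen only for this example.

```python
import random


def sphere_loss(params, target):
    """Squared distance to a target point; lower is better."""
    return sum((p - t) ** 2 for p, t in zip(params, target))


def simple_es(loss_fn, mean, sigma=0.5, population=50, elite=10,
              generations=100, seed=0):
    """Gaussian ES: sample around the mean, then move the mean to the elite average."""
    rng = random.Random(seed)
    mean = list(mean)
    for _ in range(generations):
        # Sample candidate solutions from an isotropic Gaussian around the mean.
        candidates = [
            [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
            for _ in range(population)
        ]
        # Rank candidates by loss and keep the best few.
        candidates.sort(key=loss_fn)
        best = candidates[:elite]
        # The new mean is the average of the elite candidates.
        mean = [sum(c[i] for c in best) / elite for i in range(len(mean))]
    return mean


# Hypothetical toy problem: find the point closest to `target`.
target = [3.0, -2.0]
start = [0.0, 0.0]
solution = simple_es(lambda p: sphere_loss(p, target), start)
print(sphere_loss(start, target), sphere_loss(solution, target))
```

No gradients are used anywhere: the loop only needs to rank candidates by their loss, which is why this family of methods can be applied to black-box problems.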

A Neural Representation of Sketch Drawings

David Ha, Douglas Eck

We present sketch-rnn, a generative recurrent neural network capable of producing sketches of common objects, with the goal of training a machine to draw and generalize abstract concepts in a manner similar to humans.

Demos QuickDraw | Kanji | Flow Charts | Paint

Recurrent Neural Network Tutorial for Artists

An overview of recurrent neural networks, intended for readers without any machine learning background. The goal is to show artists and designers how to use a pre-trained neural network to produce interactive digital works using JavaScript and the p5.js library.

David Ha, Andrew M. Dai, Quoc V. Le

This work explores an approach of using one network, known as a hypernetwork, to generate the weights for another network. We apply hypernetworks to generate adaptive weights for recurrent networks. In this case, hypernetworks can be viewed as a relaxed form of weight-sharing across layers.
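The idea of one network generating the weights of another can be sketched in a few lines. This is a simplified, static illustration under stated assumptions, not the model from the paper: the "hypernetwork" here is a single shared linear map, each layer of the main network owns only a small embedding vector, and all names and sizes are hypothetical. The adaptive recurrent variant is not shown.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def init_hypernetwork(embed_dim, out_dim, in_dim, rng):
    """Shared parameters: a linear map from a layer embedding to a flat weight vector."""
    return [
        [rng.gauss(0.0, 0.1) for _ in range(embed_dim)]
        for _ in range(out_dim * in_dim)
    ]


def generate_weights(hyper, embedding, out_dim, in_dim):
    """Produce an (out_dim x in_dim) weight matrix for one layer of the main network."""
    flat = matvec(hyper, embedding)
    return [flat[row * in_dim:(row + 1) * in_dim] for row in range(out_dim)]


rng = random.Random(0)
embed_dim, out_dim, in_dim = 4, 3, 3
hyper = init_hypernetwork(embed_dim, out_dim, in_dim, rng)

# Each layer of the main network stores only a small embedding vector.
layer_embeddings = [
    [rng.gauss(0.0, 1.0) for _ in range(embed_dim)] for _ in range(2)
]

x = [1.0, -0.5, 0.25]
for z in layer_embeddings:
    weights = generate_weights(hyper, z, out_dim, in_dim)
    x = [max(0.0, v) for v in matvec(weights, x)]  # generated linear layer + ReLU
print(x)
```

Because both layers draw their weights from the same `hyper` parameters, they are neither fully tied nor fully independent, which is one way to read "relaxed weight-sharing".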

Neural Network Evolution Playground with Backprop NEAT

I combined NEAT with the backpropagation algorithm and created demos for evolving efficient, but atypical neural network structures for common ML toy tasks such as classification and regression.

Demos Classification | Regression

Generating Large Images from Latent Vectors

I describe a generative model that can output arbitrarily large images by combining a CPPN with VAE and GAN models. As a demonstration, the model is trained on MNIST but generates hi-res MNIST digits at 1080p resolution.
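The reason a CPPN can produce images of any size is that it is a function from pixel coordinates to intensity, so the same network can be evaluated on a grid of any resolution. The sketch below shows only that property, with random untrained weights and a random latent vector; the VAE and GAN training is not shown, and the architecture, names, and sizes are assumptions made for illustration.

```python
import math
import random


def init_cppn(latent_dim, hidden, rng):
    """Random weights for a tiny CPPN whose inputs are (x, y, r) plus a latent vector z."""
    n_in = 3 + latent_dim
    w1 = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(hidden)]
    w2 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    return w1, w2


def cppn_pixel(params, x, y, z):
    """Intensity in [0, 1] at coordinate (x, y) for latent vector z."""
    w1, w2 = params
    inputs = [x, y, math.sqrt(x * x + y * y)] + list(z)
    hidden = [math.tanh(sum(w * v for w, v in zip(row, inputs))) for row in w1]
    out = sum(w * h for w, h in zip(w2, hidden))
    return 1.0 / (1.0 + math.exp(-out))  # sigmoid


def render(params, z, size):
    """Evaluate the same function on a size x size grid spanning [-1, 1] x [-1, 1]."""
    coords = [-1.0 + 2.0 * i / (size - 1) for i in range(size)]
    return [[cppn_pixel(params, x, y, z) for x in coords] for y in coords]


rng = random.Random(0)
params = init_cppn(latent_dim=2, hidden=8, rng=rng)
z = [rng.gauss(0.0, 1.0) for _ in range(2)]

small = render(params, z, 8)    # 8 x 8 image
large = render(params, z, 32)   # same image function sampled at 32 x 32
print(len(small), len(large))
```

Changing `size` changes only how densely the function is sampled, not the image it describes.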

Recurrent Net Dreams Up Fake Chinese Characters in Vector Format

In this project, I use recurrent neural networks to model Chinese characters. The model I constructed is trained on stroke data from a Kanji dataset. We can use this model to come up with “fake” Kanji characters.

I implement Bishop's 1994 Mixture Density Networks model in JavaScript and Python. This multimodal output distribution is used many times in my later work.

Demos Javascript | JAX Notebook
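For readers unfamiliar with mixture density networks, the key piece is the output layer: instead of predicting a single value, the network predicts the parameters of a mixture of Gaussians. The sketch below covers only that output distribution for a one-dimensional target (density, negative log-likelihood loss, and sampling), assuming the network has already produced `logits`, `means`, and `log_stds`. The parameter names are hypothetical and this is not the code from the demos.

```python
import math
import random


def softmax(logits):
    """Convert unnormalized scores into mixture weights that sum to 1."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_pdf(y, logits, means, log_stds):
    """Density of y under a 1-D Gaussian mixture."""
    density = 0.0
    for w, mu, log_s in zip(softmax(logits), means, log_stds):
        s = math.exp(log_s)  # predicting log-std keeps the std positive
        density += w * math.exp(-0.5 * ((y - mu) / s) ** 2) / (s * math.sqrt(2.0 * math.pi))
    return density


def negative_log_likelihood(y, logits, means, log_stds):
    """Loss that would be minimized when training the network."""
    return -math.log(mixture_pdf(y, logits, means, log_stds))


def sample(logits, means, log_stds, rng):
    """Pick a component by its weight, then draw from that Gaussian."""
    weights = softmax(logits)
    k = rng.choices(range(len(weights)), weights=weights)[0]
    return rng.gauss(means[k], math.exp(log_stds[k]))


# Hypothetical network output for one input: two equally likely modes at -2 and +2.
logits, means, log_stds = [0.0, 0.0], [-2.0, 2.0], [0.0, 0.0]
rng = random.Random(0)
print(negative_log_likelihood(2.0, logits, means, log_stds))
print(sample(logits, means, log_stds, rng))
```

A single Gaussian fit to this example would place its mean near zero, where the data is least likely, which is the failure mode the mixture output avoids.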

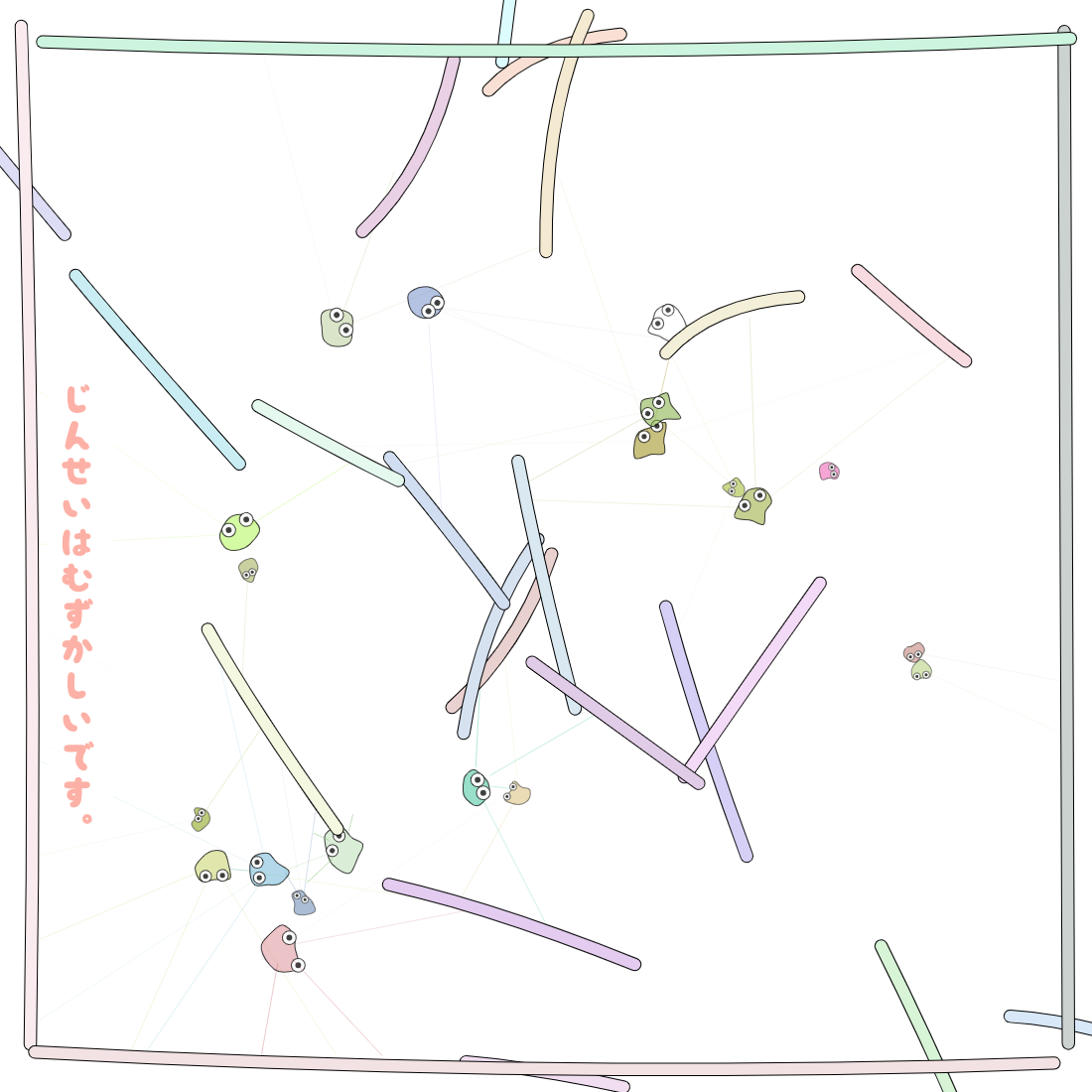

Each creature, controlled by a unique recurrent neural network brain, dies after contact with a plank. After some time, they reproduce by bumping into each other, passing on a version of their brain to future generations. Over time, they evolved a tendency to avoid planks and to be attracted to each other.

Slime agents with tiny recurrent neural network brains trained to play volleyball by self-play using neuroevolution.

I evolved a small recurrent neural network to swing up and balance an inverted double pendulum, a non-trivial task when compared to the single pendulum swing-up task. The whole project was done in JavaScript using Box2D physics, and the interactive demo works inside a web browser.